Publications

An up-to-date list is available on Google Scholar.

2022

- Lumen: A Framework for Developing and Evaluating ML-Based IoT Network Anomaly Detection. In CoNEXT, 2022

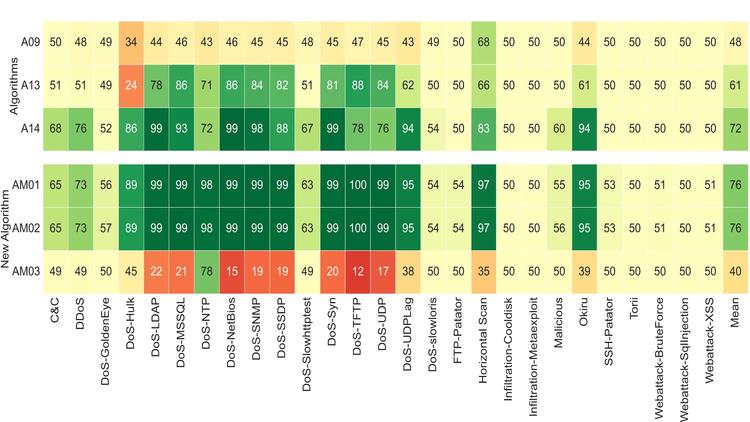

The rise of IoT devices brings many security risks. To mitigate them, researchers have introduced various promising network-based anomaly detection algorithms, which often leverage machine learning. Unfortunately, their deployment and further improvement by network operators and the research community are hampered. We believe this is due to three key reasons. First, known ML-based anomaly detection algorithms are evaluated, in the best case, on a couple of publicly available datasets, making it hard to compare across algorithms. Second, each ML-based IoT anomaly-detection algorithm makes assumptions about attacker practices and classification granularity, which reduce its applicability. Finally, the implementation of those algorithms is often monolithic, prohibiting code reuse. To ease deployment and promote research in this area, we present Lumen. Lumen is a modular framework paired with a benchmarking suite that allows users to efficiently develop, evaluate, and compare IoT ML-based anomaly detection algorithms. We demonstrate the utility of Lumen by implementing state-of-the-art anomaly detection algorithms and faithfully evaluating them on various datasets. Among other interesting insights that could inform real-world deployments and future research, using Lumen, we were able to identify which algorithms are most suitable to detect particular types of attacks. Lumen can also be used to construct new algorithms with better performance by combining the building blocks of competing efforts and improving the training setup.

- Lumos: Identifying and Localizing Diverse Hidden IoT Devices in an Unfamiliar Environment. In USENIX Security, 2022

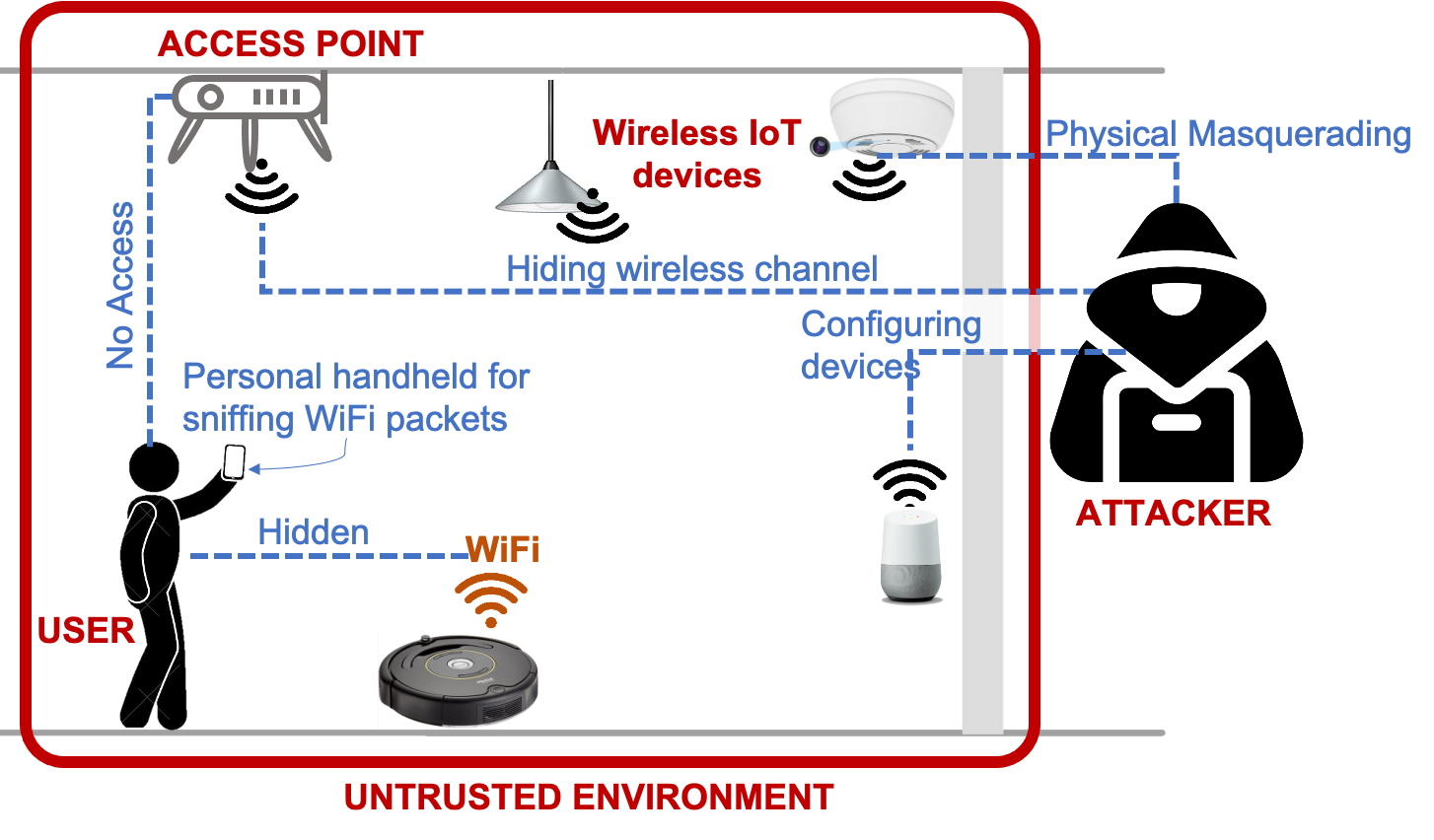

Hidden IoT devices are increasingly being used to snoop on users in hotel rooms or Airbnbs. We envision empowering users entering such unfamiliar environments to identify and locate (e.g., hidden camera behind plants) diverse hidden devices (e.g., cameras, microphones, speakers) using only their personal handhelds. What makes this challenging is the limited network visibility and physical access that a user has in such unfamiliar environments, coupled with the lack of specialized equipment. This paper presents Lumos, a system that runs on commodity user devices (e.g., phone, laptop) and enables users to identify and locate WiFi-connected hidden IoT devices and visualize their presence using an augmented reality interface. Lumos addresses key challenges in: (1) identifying diverse devices using only coarse-grained wireless layer features, without IP/DNS layer information and without knowledge of the WiFi channel assignments of the hidden devices; and (2) locating the identified IoT devices with respect to the user using only phone sensors and wireless signal strength measurements. We evaluated Lumos across 44 different IoT devices spanning various types, models, and brands across six different environments. Our results show that Lumos can identify hidden devices with 95% accuracy and locate them with a median error of 1.5m within 30 minutes in a two-bedroom, 1000 sq. ft. apartment.

2021

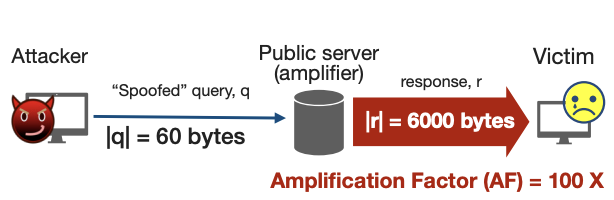

- Accurately Measuring Global Risk of Amplification Attacks using AmpMap. Soo-Jin Moon, Yucheng Yin, Rahul Anand Sharma, Yifei Yuan, Jonathan M Spring, and 1 more author. In USENIX Security, 2021

2020

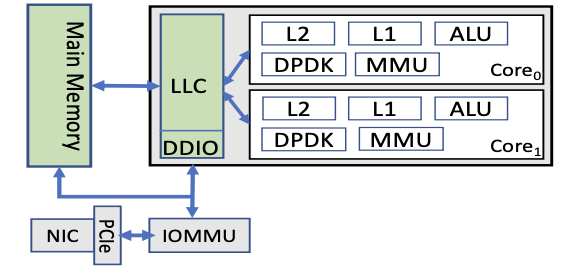

- Contention-aware performance prediction for virtualized network functions. Antonis Manousis, Rahul Anand Sharma, Vyas Sekar, and Justine Sherry. In SIGCOMM, 2020

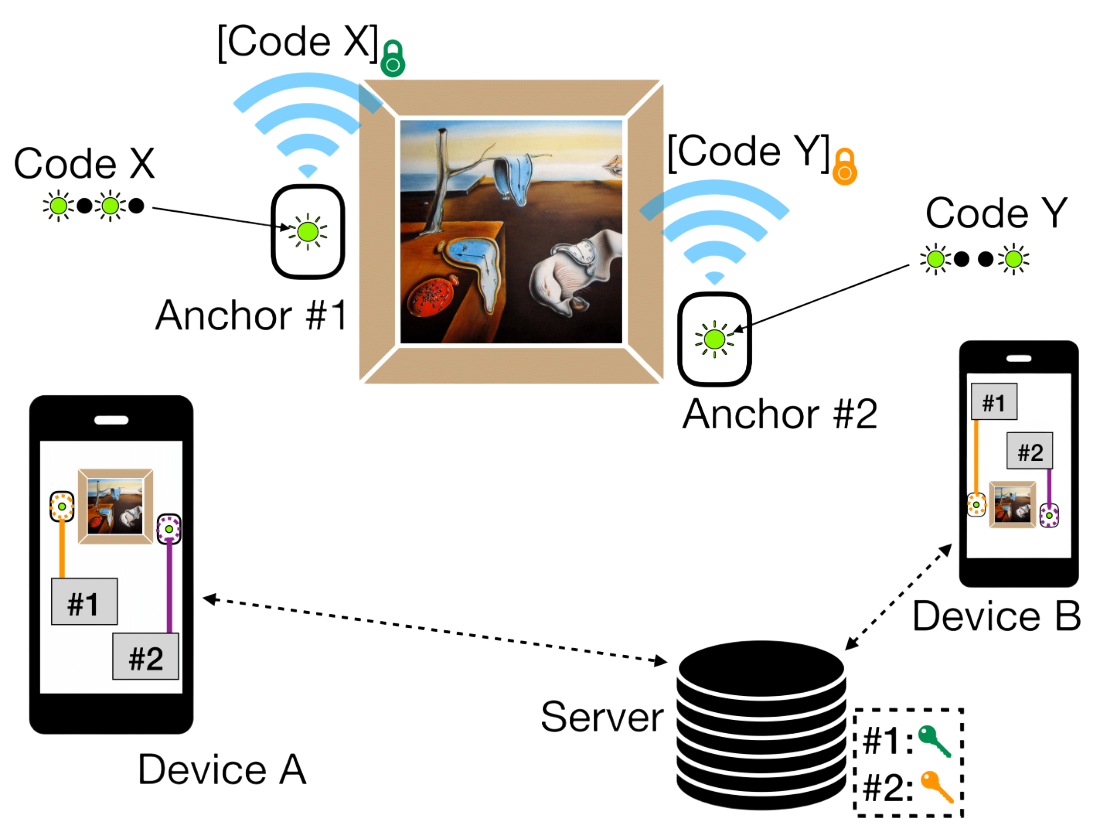

- All that glitters: Low-power spoof-resilient optical markers for augmented reality. Rahul Anand Sharma, Adwait Dongare, John Miller, Nicholas Wilkerson, Daniel Cohen, and 3 more authors. In IPSN, 2020

One of the major challenges faced by Augmented Reality (AR) systems is linking virtual content accurately to physical objects and locations. This problem is amplified for applications like mobile payment, device control, or secure pairing that require authentication. In this paper, we present an active LED tag system called GLITTER that uses a combination of Bluetooth Low-Energy (BLE) and modulated LEDs to anchor AR content with no a priori training or labeling of an environment. Unlike traditional optical markers that encode data spatially, each active optical marker encodes a tag’s identifier by blinking over time, improving both the tag density and range compared to AR tags and QR codes. We show that with a low-power BLE-enabled micro-controller and a single 5 mm LED, we are able to accurately link AR content from potentially hundreds of tags simultaneously on a standard mobile phone from as far as 30 meters. Expanding upon this, using active optical markers as a primitive, we show how a constellation of active optical markers can be used for full 3D pose estimation, which is required for many AR applications, using either a single LED on a planar surface or two or more arbitrarily positioned LEDs. Our design supports 108 unique codes in a single field of view with a detection latency of less than 400 ms even when held by hand.

- Robust and practical WiFi human sensing using on-device learning with a domain adaptive model. Elahe Soltanaghaei, Rahul Anand Sharma, Zehao Wang, Adarsh Chittilappilly, Anh Luong, and 4 more authors. In BuildSys, 2020

2019

- Low-cost aerial imaging for small holder farmers. AN Swamy, Akshit Kumar, Rohit Patil, Aditya Jain, Zerina Kapetanovic, and 6 more authors. In ACM COMPASS, 2019

2018

- Automated top view registration of broadcast football videos. Rahul Anand Sharma, Bharath Bhat, Vineet Gandhi, and CV Jawahar. In WACV, 2018

In this paper, we propose a novel method to register football broadcast video frames on the static top view model of the playing surface. The proposed method is fully automatic in contrast to the current state of the art which requires manual initialization of point correspondences between the image and the static model. Automatic registration using existing approaches has been difficult due to the lack of sufficient point correspondences. We investigate an alternate approach exploiting the edge information from the line markings on the field. We formulate the registration problem as a nearest neighbour search over a synthetically generated dictionary of edge map and homography pairs. The synthetic dictionary generation allows us to exhaustively cover a wide variety of camera angles and positions and reduce this problem to a minimal per-frame edge map matching procedure. We show that the per-frame results can be improved in videos using an optimization framework for temporal camera stabilization. We demonstrate the efficacy of our approach by presenting extensive results on a dataset collected from matches of football World Cup 2014.
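The dictionary lookup described in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the flattened binary edge maps, the Hamming distance, and the names `hamming`, `register_frame`, and `toy_dictionary` are all assumptions made for illustration, and the synthetic dictionary here has only two hand-written entries.

```python
from typing import List, Sequence, Tuple

EdgeMap = Sequence[int]          # flattened binary edge map (1 = edge pixel); an assumed encoding
Homography = Tuple[float, ...]   # 3x3 matrix stored row-major as 9 values


def hamming(a: EdgeMap, b: EdgeMap) -> int:
    """Count the pixels where two equally sized edge maps disagree."""
    if len(a) != len(b):
        raise ValueError("edge maps must have the same size")
    return sum(x != y for x, y in zip(a, b))


def register_frame(query: EdgeMap,
                   dictionary: List[Tuple[EdgeMap, Homography]]) -> Homography:
    """Return the homography paired with the dictionary edge map nearest to the query."""
    _, best_h = min(dictionary, key=lambda pair: hamming(query, pair[0]))
    return best_h


# Toy stand-in for a synthetically generated dictionary (hypothetical values).
IDENTITY = (1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0)
SHIFT_X = (1.0, 0.0, 2.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0)
toy_dictionary = [
    ((1, 1, 0, 0, 0, 0, 0, 0), IDENTITY),
    ((0, 0, 1, 1, 0, 0, 0, 0), SHIFT_X),
]

# A noisy edge map extracted from a frame; it differs from the second entry by one pixel.
query = (0, 0, 1, 1, 0, 1, 0, 0)
result = register_frame(query, toy_dictionary)
```

A brute-force scan keeps the sketch short; a large dictionary would normally call for an approximate nearest-neighbour index and a distance better suited to edge images.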

- Fall-curve: A novel primitive for IoT Fault Detection and Isolation. Tusher Chakraborty, Akshay Uttama Nambi, Ranveer Chandra, Rahul Sharma, Manohar Swaminathan, and 2 more authors. In SenSys, 2018

- Learnability of learned neural networks. Rahul Anand Sharma, Navin Goyal, Monojit Choudhury, and Praneeth Netrapalli. arXiv, 2018

This paper explores the simplicity of learned neural networks under various settings: learned on real vs. random data, varying size/architecture, and using large vs. small minibatch sizes. The notion of simplicity used here is that of learnability, i.e., how accurately the prediction function of a neural network can be learned from labeled samples from it. While learnability is different from (in fact often higher than) test accuracy, the results herein suggest that there is a strong correlation between small generalization errors and high learnability. This work also shows that there exist significant qualitative differences in shallow networks as compared to popular deep networks. More broadly, this paper extends, in a new direction, previous work on understanding the properties of learned neural networks. Our hope is that such an empirical study of understanding learned neural networks might shed light on the right assumptions that can be made for a theoretical study of deep learning.
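The notion of learnability in this abstract can be made concrete with a small sketch. It is an illustration under stated assumptions, not the paper's experimental setup: `teacher` is a hypothetical stand-in for a trained network's prediction function, the student is a 1-nearest-neighbour classifier rather than a neural network, and inputs are drawn uniformly from the unit square.

```python
import random
from typing import Callable, List, Tuple

Point = Tuple[float, float]


def teacher(x: Point) -> int:
    """Hypothetical stand-in for the prediction function of a trained network."""
    return 1 if x[0] + x[1] > 1.0 else 0


def fit_1nn(samples: List[Tuple[Point, int]]) -> Callable[[Point], int]:
    """Build a 1-nearest-neighbour student from (input, teacher label) pairs."""
    def student(x: Point) -> int:
        nearest = min(
            samples,
            key=lambda s: (s[0][0] - x[0]) ** 2 + (s[0][1] - x[1]) ** 2,
        )
        return nearest[1]
    return student


def learnability(f: Callable[[Point], int],
                 n_train: int = 200,
                 n_test: int = 200,
                 seed: int = 0) -> float:
    """Fraction of fresh inputs on which a student trained on f's labels agrees with f."""
    rng = random.Random(seed)

    def draw() -> Point:
        return (rng.random(), rng.random())

    # Label the training inputs with the teacher itself, not with ground truth.
    train = [(x, f(x)) for x in (draw() for _ in range(n_train))]
    student = fit_1nn(train)
    test_points = [draw() for _ in range(n_test)]
    agreements = sum(student(x) == f(x) for x in test_points)
    return agreements / n_test


score = learnability(teacher)
```

The score compares the student with the teacher's own predictions rather than with true labels, which is why learnability can differ from test accuracy.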

2017

- Automatic analysis of broadcast football videos using contextual priors. Rahul Anand Sharma, Vineet Gandhi, Visesh Chari, and CV Jawahar. Signal, Image and Video Processing, 2017

The presence of standard video editing practices in broadcast sports videos, like football, effectively means that such videos have stronger contextual priors than most generic videos. In this paper, we show that such information can be harnessed for automatic analysis of sports videos. Specifically, given an input video, we output per-frame information about camera angles and the events (goal, foul, etc.). Our main insight is that in the presence of temporal context (camera angles) for a video, the problem of event tagging (fouls, corners, goals, etc.) can be cast as a per-frame multiclass classification problem. We show that even with simple classifiers like linear SVM, we get significant improvement in the event tagging task when contextual information is included. We present extensive results for 10 matches from the recently concluded Football World Cup to demonstrate the effectiveness of our approach.

2015

- Fine-grain annotation of cricket videos. Rahul Anand Sharma, K Pramod Sankar, and CV Jawahar. In ACPR, 2015

The recognition of human activities is one of the key problems in video understanding. Action recognition is challenging even for specific categories of videos, such as sports, that contain only a small set of actions. Interestingly, sports videos are accompanied by detailed commentaries available online, which could be used to perform action annotation in a weakly-supervised setting. For the specific case of Cricket videos, we address the challenge of temporal segmentation and annotation of actions with semantic descriptions. Our solution consists of two stages. In the first stage, the video is segmented into “scenes”, by utilizing the scene category information extracted from text-commentary. The second stage consists of classifying video-shots as well as the phrases in the textual description into various categories. The relevant phrases are then suitably mapped to the video-shots. The novel aspect of this work is the fine temporal scale at which semantic information is assigned to the video. As a result of our approach, we enable retrieval of specific actions that last only a few seconds, from several hours of video. This solution yields a large number of labelled exemplars, with no manual effort, that could be used by machine learning algorithms to learn complex actions.